Data Engineer Pet Project Ideas: from Beginners to Advanced Level

The author of this article is tech expert Pieter Murphy.

In the rapidly evolving world of technology, data has become the cornerstone of decision-making and innovation. As a data engineer, honing your skills through practical experience is essential to staying ahead, and pet projects are one of the best ways to get that experience.

Whether you're just starting your journey or looking to challenge your advanced skills, pet projects are a fantastic way to learn, innovate, and even contribute to the community. From beginner-friendly projects that lay a solid foundation in data manipulation and storage to advanced undertakings that push the boundaries of data processing and analytics, there are options for everyone. Here are pet projects for data engineers to try.

Data Engineering Project Ideas for Beginners

When you’re new to data engineering, starting with projects that focus on the fundamentals is generally the best choice. It allows you to hone critical capabilities without getting overwhelmed, making the learning process easier.

Additionally, beginner projects are ideal for exploring whether becoming a data engineer, or working in a similar role, is genuinely right for you. If you're considering moving into the field but want to make sure it's a good fit first, they're an easy way to find out.

Here are some beginner-friendly projects to try out.

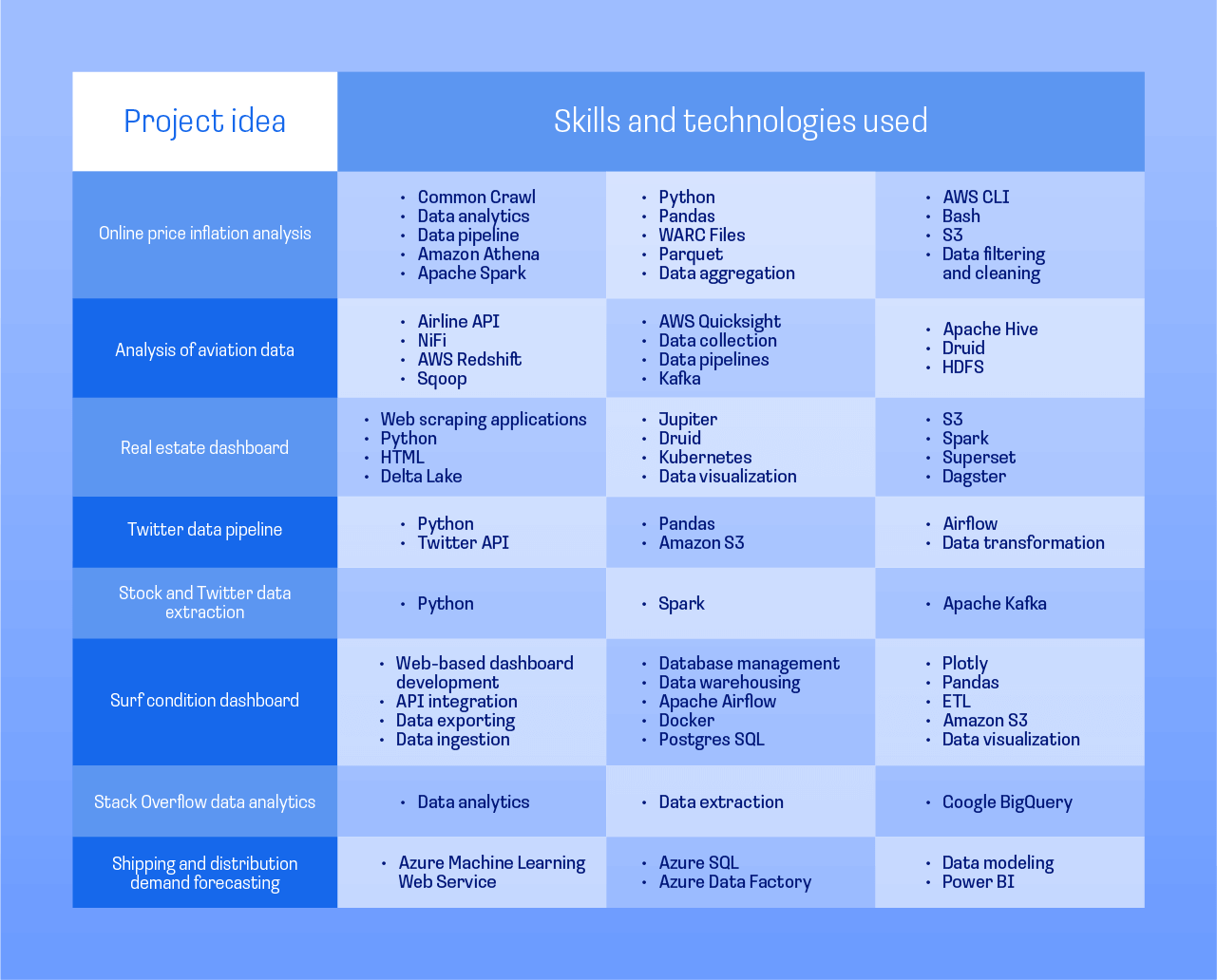

Online Price Inflation Analysis

With inflation a problem in many parts of the world, this is one of the more timely beginner data engineering projects to consider. It focuses on gathering prices for goods and services sold online and calculating inflation rates from how those prices shift over time.
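To make the core calculation concrete, here is a minimal sketch. It assumes you already have prices for the same products in two periods, keyed by hypothetical product IDs. It uses a Jevons index (the geometric mean of price ratios), which is one common way to build an elementary price index. The project itself may compute inflation differently.

```python
import math
from statistics import fmean


def jevons_inflation(base_prices: dict[str, float], current_prices: dict[str, float]) -> float:
    """Percentage inflation between two periods, using only products priced in both.

    Keys are hypothetical product IDs; values are prices in the same currency.
    """
    common = base_prices.keys() & current_prices.keys()
    if not common:
        raise ValueError("no products were observed in both periods")
    # Jevons index: geometric mean of current/base price ratios.
    log_ratios = [math.log(current_prices[p] / base_prices[p]) for p in common]
    index = math.exp(fmean(log_ratios))
    return (index - 1.0) * 100.0


base = {"milk-1l": 1.00, "bread": 2.50, "coffee-500g": 6.00}
now = {"milk-1l": 1.10, "bread": 2.75, "coffee-500g": 6.60, "new-item": 3.00}
print(f"{jevons_inflation(base, now):.1f}%")  # prints 10.0%
```

Products that appear in only one period (like `new-item` above) are ignored. This keeps the comparison like-for-like, at the cost of missing new products.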

The results show how pricing has changed over time, providing an intriguing look into price inflation. That makes it easy to stay motivated throughout the project, particularly if you're also curious about economics.

While this project is beginner-friendly, it does involve a variety of skills and technologies. That makes it a strong choice for those new to the field who want data engineer portfolio projects to boost their resumes.

Skills and technologies used:

- Common Crawl

- Data analytics

- Data pipeline

- Amazon Athena

- Apache Spark

- AWS CLI

- Bash

- Python

- Pandas

- WARC Files

- Parquet

- Data aggregation

- Data filtering and cleaning

- S3

Source code: Online Price Inflation Analysis

Analysis of Aviation Data

Aviation data is plentiful, which makes an aviation data analysis project an excellent option. This one is also among the best open-source data engineering projects for beginners, since it relies on skills that help people launch their careers.

It teaches you how to get data from a streaming API. Additionally, it includes data cleansing, transformation, and visualization. There are also several technologies involved, making it an ideal way to boost fundamental skills using a real-world approach.
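A typical cleansing step before flight records reach a warehouse does three things: it drops incomplete records, normalizes codes, and removes duplicates. The sketch below assumes each record is a dict with hypothetical fields `flight_number`, `origin`, `destination`, and `scheduled_utc` (an ISO 8601 timestamp). The airline API used in the project will have its own schema.

```python
from datetime import datetime, timezone

# Hypothetical field names; map these to the real API's schema.
REQUIRED_FIELDS = ("flight_number", "origin", "destination", "scheduled_utc")


def clean_flight_records(raw_records: list[dict]) -> list[dict]:
    """Drop incomplete records, normalize codes and timestamps, and de-duplicate."""
    cleaned, seen = [], set()
    for rec in raw_records:
        # Skip records with any required field missing or empty.
        if any(not rec.get(field) for field in REQUIRED_FIELDS):
            continue
        scheduled = datetime.fromisoformat(rec["scheduled_utc"])
        if scheduled.tzinfo is None:
            # Assumption: naive timestamps are already in UTC.
            scheduled = scheduled.replace(tzinfo=timezone.utc)
        row = {
            "flight_number": rec["flight_number"].strip().upper(),
            "origin": rec["origin"].strip().upper(),
            "destination": rec["destination"].strip().upper(),
            "scheduled_utc": scheduled.astimezone(timezone.utc),
        }
        # The same flight can appear in several API polls; keep the first one.
        key = (row["flight_number"], row["scheduled_utc"])
        if key not in seen:
            seen.add(key)
            cleaned.append(row)
    return cleaned
```

De-duplication runs after normalization. As a result, `" ua100 "` and `"UA100"` scheduled for the same instant are recognized as one flight, even when the timestamps were written in different time zones.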

Skills and technologies used:

- Airline API

- NiFi

- AWS Redshift

- Sqoop

- Apache Hive

- Druid

- Amazon QuickSight

- Data collection

- Data pipelines

- Kafka

- HDFS

Source code: Analysis of Aviation Data

Real Estate Dashboard

If you're looking for data engineering projects with source code, this one is excellent for newcomers who want a quick, free mini project. It uses web scraping to collect real estate information for a data warehouse, and you'll also create a dashboard for visualization.

While that description makes it all sound simple, you’ll harness a variety of skills and technologies along the way. As a result, this is a strong option for beginner data engineer projects for resume additions, first portfolios, or straightforward skill-building.

Another benefit is that this project can be adjusted to target local real estate data. That offers a side benefit: if you happen to be looking for a new home, it can help you find options within your price range.
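The scraping step usually comes down to pulling a few fields out of listing pages. The project lists Beautiful Soup and Scrapy. To keep this sketch dependency-free, it uses the standard library's `html.parser` instead. It also assumes a hypothetical page layout in which each price sits in an element with the class `listing-price`, containing plain text only.

```python
import re
from html.parser import HTMLParser
from typing import Optional


def parse_price(text: str) -> Optional[int]:
    """Turn strings like '$425,000' into 425000 (assumes whole-dollar prices)."""
    digits = re.sub(r"\D", "", text)
    return int(digits) if digits else None


class ListingPriceParser(HTMLParser):
    """Collect prices from elements whose class list contains `target_class`."""

    def __init__(self, target_class: str = "listing-price"):
        super().__init__()
        self.target_class = target_class
        self.prices: list[int] = []
        self._capturing = False
        self._buffer: list[str] = []

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if self.target_class in classes:
            self._capturing = True
            self._buffer = []

    def handle_data(self, data):
        if self._capturing:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        # Assumes the price element has no nested tags, so the next end tag closes it.
        if self._capturing:
            self._capturing = False
            price = parse_price("".join(self._buffer))
            if price is not None:
                self.prices.append(price)


html = """
<div class="card"><span class="listing-price">$425,000</span><span class="beds">3 bd</span></div>
<div class="card"><span class="listing-price featured">$1,150,000</span></div>
"""
parser = ListingPriceParser()
parser.feed(html)
print(parser.prices)  # [425000, 1150000]
```

On real sites, a library like Beautiful Soup handles nested and messy markup far more gracefully. Treat this as an illustration of the extraction logic rather than a production scraper.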

Skills and technologies used:

- Web scraping applications (like Beautiful Soup and Scrapy)

- Python

- HTML

- Delta Lake

- S3

- Spark

- Jupyter

- Druid

- Kubernetes

- Data visualization

- Superset

- Dagster

Source code: Real Estate Dashboard

Twitter Data Pipeline

For beginners looking for data engineering projects in Python, this one is a solid choice. You'll gather new data from the Twitter API, process it with Python, Pandas, and Airflow, and ultimately save the final results in Amazon S3.

The benefit of this project is that it’s accompanied by a video, making it simpler to follow than some other options. As a result, it’s an excellent choice for those new to data pipeline creation who prefer a more visual approach to learning.
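The transform step in a pipeline like this flattens the API response into tabular rows before they're written to S3. The sketch below assumes Twitter API v2-style tweet objects, with `id`, `text`, `author_id`, `created_at`, and a nested `public_metrics` object. It uses the standard library's `csv` module in place of Pandas. In an Airflow DAG, a function like this would typically run inside a task, and the S3 upload would be handled separately.

```python
import csv
import io

FIELDS = ["id", "author_id", "created_at", "text", "like_count", "retweet_count"]


def tweets_to_csv(tweets: list[dict]) -> str:
    """Flatten tweet objects into CSV text, one row per tweet."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    for tweet in tweets:
        metrics = tweet.get("public_metrics", {})
        writer.writerow({
            "id": tweet["id"],
            "author_id": tweet.get("author_id", ""),
            "created_at": tweet.get("created_at", ""),
            # Collapse newlines and repeated whitespace so each tweet reads as one line.
            "text": " ".join(tweet["text"].split()),
            "like_count": metrics.get("like_count", 0),
            "retweet_count": metrics.get("retweet_count", 0),
        })
    return buffer.getvalue()
```

Missing metrics default to zero, so a partially populated response still produces a complete row.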

Skills and technologies used:

- Python

- Twitter API

- Airflow

- Pandas

- Amazon S3

- Data transformation

Source code: Twitter Data Pipeline

Stock and Twitter Data Extraction

If you’re curious about how public sentiment can influence the stock market or simply want to hone your data extraction skills, this project is a solid choice. It relies on information extracted from the social media site Twitter and the stock market and assesses user sentiment through a big data pipeline.

This option is an excellent choice for anyone interested in real-time data engineering projects for beginners, as well as anyone who wants to improve their Python, Kafka, and Spark capabilities. Plus, it can provide some fascinating insights into the world of stocks and how public sentiment makes a difference, making it easier to see how the GameStop stock drama unfolded.

Skills and technologies used:

- Python

- Apache Kafka

- Spark

Source code: Stock and Twitter Data Extraction

Surf Condition Dashboard

A solid choice for beginners, this is one of the open-source data engineering projects that tap into many skill areas. It develops a data pipeline that collects information from the Surfline API to power a functional dashboard.

One reason this project is an excellent choice for those new to the field is that its overview is straightforward. Plus, with the variety of skills used, it can make an ideal addition to a portfolio. You'll dive into API integration, data ingestion, data warehousing, and much more, building fundamental capabilities that are critical at the start of your career.
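The load step of an ETL like this often upserts forecast rows, so reruns of the same DAG don't create duplicates. The sketch below uses the standard library's `sqlite3` as a stand-in for PostgreSQL. The `ON CONFLICT ... DO UPDATE` upsert syntax shown is supported by both, though a Postgres driver such as psycopg2 uses `%s` placeholders instead of `?`. The table name and forecast fields (`timestamp`, `surf_min`, `surf_max`) are hypothetical, not the Surfline API's actual schema.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS surf_forecast (
    spot_id  TEXT    NOT NULL,
    ts       INTEGER NOT NULL,  -- forecast time, Unix seconds
    surf_min REAL,
    surf_max REAL,
    PRIMARY KEY (spot_id, ts)
)
"""

UPSERT = """
INSERT INTO surf_forecast (spot_id, ts, surf_min, surf_max)
VALUES (?, ?, ?, ?)
ON CONFLICT (spot_id, ts) DO UPDATE SET
    surf_min = excluded.surf_min,
    surf_max = excluded.surf_max
"""


def load_forecast(conn: sqlite3.Connection, spot_id: str, forecast: list[dict]) -> int:
    """Upsert one spot's forecast rows; returns the number of rows written."""
    conn.execute(SCHEMA)
    rows = [(spot_id, f["timestamp"], f["surf_min"], f["surf_max"]) for f in forecast]
    with conn:  # commits on success, rolls back on error
        conn.executemany(UPSERT, rows)
    return len(rows)
```

Because the primary key is `(spot_id, ts)`, loading a newer forecast for the same hour overwrites the old values instead of adding a second row. The dashboard therefore always reads one current value per spot and hour.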

Skills and technologies used:

- Web-based dashboard development

- API integration

- Data exporting

- Data ingestion

- Database management

- Data warehousing

- Apache Airflow

- Docker

- PostgreSQL

- Plotly

- Pandas

- ETL

- Amazon S3

- Data visualization

Source code: Surf Condition Dashboard

Stack Overflow Data Analytics

Stack Overflow hosts millions of programming questions and answers, and its data is available as a public dataset in Google BigQuery. That makes it a valuable resource for analytics projects that require larger datasets. You can query it to conduct research in a variety of directions, making this project an excellent choice for honing data analysis skills.

Plus, this project may help you see the potential of other publicly available datasets. In turn, it may inspire additional projects that can help you hone critical skills or build a new addition to your professional portfolio.
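As an example of the kind of question you might ask, the sketch below counts the most-used tags for a given year. It assumes the public `bigquery-public-data.stackoverflow.posts_questions` table, in which `tags` is stored as a pipe-delimited string. It also assumes a client object with a `query()` method, such as `google.cloud.bigquery.Client`. Running it for real requires a Google Cloud project with BigQuery access, and queries count against your BigQuery usage.

```python
# Assumes the public bigquery-public-data.stackoverflow dataset, where `tags`
# is a pipe-delimited string such as "python|pandas".
TOP_TAGS_SQL = """
SELECT tag, COUNT(*) AS questions
FROM `bigquery-public-data.stackoverflow.posts_questions`,
  UNNEST(SPLIT(tags, '|')) AS tag
WHERE EXTRACT(YEAR FROM creation_date) = {year}
GROUP BY tag
ORDER BY questions DESC
LIMIT {limit}
"""


def top_tags(client, year: int, limit: int = 10) -> list[tuple[str, int]]:
    """Most-asked tags in a year. `client` is anything with a BigQuery-style .query()."""
    # int() guards the values that are formatted directly into the SQL text.
    sql = TOP_TAGS_SQL.format(year=int(year), limit=int(limit))
    rows = client.query(sql).result()
    return [(row["tag"], row["questions"]) for row in rows]
```

With the `google-cloud-bigquery` package installed and credentials configured, you would call it as `top_tags(bigquery.Client(), 2020)` after `from google.cloud import bigquery`. Passing the client in also makes the function easy to test with a stand-in object.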

Skills and technologies used:

- Data analytics

- Data extraction

- Google BigQuery

Source code: Stack Overflow Data Analytics

Shipping and Distribution Demand Forecasting

This shipping and distribution demand forecasting project is an excellent option for anyone seeking beginner data engineering projects. It uses historical demand data to anticipate future needs, allowing logistics companies to plan more effectively.

While the project is focused on shipping and distribution, the concepts apply to many other industries and functional areas. As a result, this is a solid choice for building critical expertise that provides employers with exceptional value.

Plus, you’ll use a variety of foundational skills along the way, as well as tap into some intermediate capabilities. SQL, machine learning, Azure Data Factory, and more are involved, though the project is still simple enough for those new to the field.
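The project uses Azure Machine Learning for forecasting. Before training a model, it's common to compare against a simple baseline. The sketch below swaps in simple exponential smoothing, a standard baseline that isn't necessarily what the project uses, to forecast the next period's demand from a hypothetical history of order volumes.

```python
def exponential_smoothing_forecast(history: list[float], alpha: float = 0.3) -> float:
    """One-step-ahead forecast with simple exponential smoothing.

    alpha near 1 tracks recent demand closely; alpha near 0 smooths more heavily.
    """
    if not history:
        raise ValueError("history must contain at least one observation")
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    level = history[0]
    for demand in history[1:]:
        # Blend the newest observation with the running level.
        level = alpha * demand + (1.0 - alpha) * level
    return level


weekly_orders = [120, 135, 128, 150, 160]  # hypothetical demand history
print(round(exponential_smoothing_forecast(weekly_orders, alpha=0.5), 2))  # 149.44
```

If a trained model can't clearly beat a baseline like this on held-out weeks, that's a useful signal before investing in more complex pipelines.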

Skills and technologies used:

- Azure SQL

- Azure Machine Learning Web Service

- Azure Data Factory

- Data modeling

- Power BI

Source code: Shipping and Distribution Demand Forecasting

Intermediate-level Data Engineer Projects for Resume

With intermediate-level projects, you usually need a well-developed skillset and familiarity with many of the programming languages, tools, and technologies used for data engineering. However, you also want to find projects that introduce a degree of challenge, ensuring you grow professionally along the way.

It’s also wise to find options that are strong additions to a mid-level resume, CV, or portfolio. By doing so, you can increase your odds of landing job interviews or otherwise advancing in your career.

Here are some projects to check out.

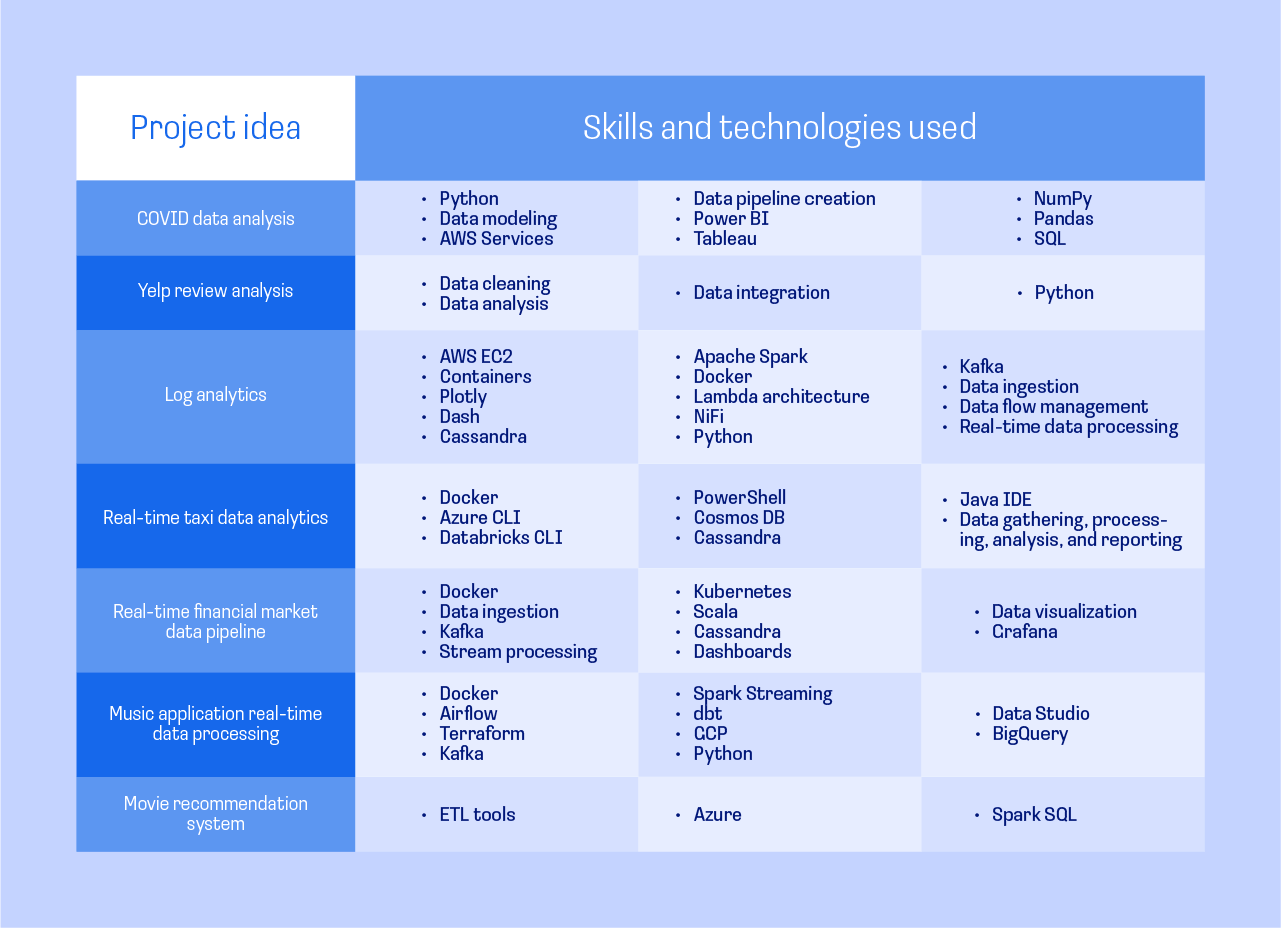

COVID Data Analysis

For data engineering portfolio projects, this COVID data analysis is a solid choice. You'll work with data using a variety of technologies, tools, and platforms, including Power BI, Python, SQL, AWS, Pandas, NumPy, Tableau, and more. Plus, the insights are intriguing, particularly since COVID remains an issue today.

One reason this project is worth exploring is that it has a video series serving as a walkthrough, and it's well broken down, which makes following along straightforward. That makes the learning process far more visual, which is helpful in many cases. Plus, the way the guide is structured makes it easy to step away and come back to your project later.
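COVID case counts are often published as cumulative totals, so a common early transformation converts them to daily new cases and smooths the result with a 7-day rolling average. The sketch below assumes a hypothetical list of cumulative counts for one region, ordered by date. It clamps negative daily values to zero; these can appear when earlier counts are revised. That clamping is a simplifying choice, not a rule from the project.

```python
from typing import Optional


def daily_new_cases(cumulative: list[int]) -> list[int]:
    """Differences between consecutive cumulative totals (the first day counts from zero)."""
    previous = [0] + cumulative[:-1]
    # Revisions can make totals drop; clamp those days to zero.
    return [max(cur - prev, 0) for prev, cur in zip(previous, cumulative)]


def rolling_mean(values: list[float], window: int = 7) -> list[Optional[float]]:
    """Trailing mean over `window` days; None until enough days have accumulated."""
    out: list[Optional[float]] = []
    total = 0.0
    for i, value in enumerate(values):
        total += value
        if i >= window:
            total -= values[i - window]  # drop the day that left the window
        out.append(total / window if i >= window - 1 else None)
    return out
```

Keeping a running total makes each step constant-time, which matters little here but mirrors how window functions are computed efficiently in SQL engines.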

Skills and technologies used:

- Python

- Data modeling

- AWS Services

- Data pipeline creation

- Power BI

- Tableau

- NumPy

- Pandas

- SQL

Source code: COVID Data Analysis

Yelp Review Analysis

On Yelp, users can rate and review a variety of businesses, making the site an excellent resource for anyone who wants to do a personal project using Python with one of the larger public data sources around. This project gathers information about seven cuisine types for analysis. Essentially, it harnesses customer reviews to provide insight and make recommendations designed to help a restaurant grow and thrive.

One reason this is a strong resume project is that it concentrates on big data. Plus, the focus on sentiment analysis has many applications, particularly for companies that want data-driven insights to improve their standing. It also uses real-world information, as the dataset consists of genuine Yelp user data.
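A typical first aggregation in an analysis like this is the average star rating per cuisine. The sketch assumes records shaped like the Yelp Open Dataset's business and review JSON: a `business_id`, a comma-separated `categories` string, and a review `stars` value. The cuisine set you pass in is your own choice; it isn't the project's list of seven.

```python
from collections import defaultdict
from statistics import fmean


def average_stars_by_cuisine(businesses: list[dict], reviews: list[dict],
                             cuisines: set[str]) -> dict[str, float]:
    """Mean review stars per cuisine; a business can count toward several cuisines."""
    cuisines_by_business = {}
    for business in businesses:
        # categories is assumed to be a comma-separated string such as "Restaurants, Thai".
        categories = {c.strip() for c in (business.get("categories") or "").split(",")}
        matched = categories & cuisines
        if matched:
            cuisines_by_business[business["business_id"]] = matched
    stars = defaultdict(list)
    for review in reviews:
        for cuisine in cuisines_by_business.get(review["business_id"], ()):
            stars[cuisine].append(review["stars"])
    return {cuisine: fmean(values) for cuisine, values in stars.items()}
```

Building the business-to-cuisine lookup first means the much larger review file is scanned once, with a constant-time lookup per review.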

Skills and technologies used:

- Data cleaning

- Data analysis

- Data integration

- Python

Source code: Yelp Review Analysis

Log Analytics

If you want a project that uses Python, Kafka, and many other skill areas, this log analytics project is an excellent addition to a data engineering portfolio. It involves real-time streaming as part of the data flow and focuses on log data provided by NASA.

Knowing how to analyze logs is a valuable skill and one that may be asked about during an interview. With log analysis, companies can identify patterns or anomalies, allowing the information from data streams to provide valuable insights or support cybersecurity strategies.
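A first step in log analytics is parsing raw lines into structured fields. The sketch below assumes the logs follow the Common Log Format, as NASA's well-known web server logs do. It parses that format with a regular expression and counts responses per HTTP status code. In the project's streaming setup, logic like this would sit in the processing layer rather than run over a list. The sample lines used to exercise it are illustrative.

```python
import re
from collections import Counter
from typing import Optional

# host ident authuser [timestamp] "request" status bytes
CLF_PATTERN = re.compile(
    r'^(?P<host>\S+) \S+ \S+ \[(?P<timestamp>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<size>\d+|-)$'
)


def parse_log_line(line: str) -> Optional[dict]:
    """Return the line's fields as a dict, or None if it doesn't match the format."""
    match = CLF_PATTERN.match(line.strip())
    if match is None:
        return None
    record = match.groupdict()
    record["status"] = int(record["status"])
    # "-" means no size was logged (for example, a 304 with no body).
    record["size"] = 0 if record["size"] == "-" else int(record["size"])
    return record


def status_counts(lines) -> Counter:
    """Count parsed lines by HTTP status, silently skipping malformed lines."""
    return Counter(rec["status"] for rec in map(parse_log_line, lines) if rec)
```

Returning `None` for malformed lines, instead of raising, keeps one bad record from stopping a long-running stream. In practice you'd also count the skipped lines, so a format change doesn't go unnoticed.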

Skills and technologies used:

- AWS EC2

- Containers

- Plotly

- Dash

- Cassandra

- Apache Spark

- Docker

- Lambda architecture

- NiFi

- Python

- Kafka

- Data ingestion

- Data flow management

- Real-time data processing

Source code: Log Analytics

Real-Time Taxi Data Analytics

If you’ve got some experience in the field and are looking for data engineer real-time projects, this is an excellent option. It focuses on gathering taxi service data, including information about trips and customer payments, to provide the company with valuable insights.

With this end-to-end data engineering project, you essentially build the entire pipeline. It covers four full stages (ingest, process, store, and analyze and report), making it a comprehensive choice. Since the skills used apply to many other types of real-time analysis, it's a solid choice for your portfolio, too.
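The process stage of a real-time pipeline like this typically aggregates events over fixed time windows. The sketch below shows tumbling-window aggregation in plain Python over hypothetical `(epoch_seconds, fare)` trip events. Stream processors such as Spark Structured Streaming add watermarks and late-data handling, which this sketch leaves out.

```python
from collections import defaultdict


def tumbling_window_totals(events, window_seconds: int = 60) -> dict[int, tuple[int, float]]:
    """Group (epoch_seconds, fare) events into fixed, non-overlapping windows.

    Returns {window_start: (trip_count, total_fare)} ordered by window start.
    """
    windows: dict[int, list] = defaultdict(lambda: [0, 0.0])
    for ts, fare in events:
        start = int(ts) - int(ts) % window_seconds  # align to the window boundary
        windows[start][0] += 1
        windows[start][1] += fare
    return {start: (count, total) for start, (count, total) in sorted(windows.items())}
```

Each event belongs to exactly one window, determined only by its own timestamp. That's why tumbling windows work even when events arrive out of order, as long as you wait long enough before reporting a window as final.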

Skills and technologies used:

- Docker

- Azure CLI

- Databricks CLI

- PowerShell

- Cosmos DB

- Cassandra

- Java IDE (JDK 1.8, Scala SDK 2.12, and Maven 3.6.3)

- Data gathering, processing, analysis, and reporting

Source code: Real-Time Taxi Data Analytics

Real-Time Financial Market Data Pipeline

Another intermediate-level portfolio example worth exploring is this real-time financial market data pipeline built on the Finnhub API. It involves a streaming data pipeline for real-time information. It's also a multi-faceted project featuring data ingestion, stream processing, database management, and visualization, which makes it a solid option for mid-career professionals.

During the project, you’ll harness skills, tools, and technologies like Kafka, Apache Spark, Kubernetes, and Cassandra. Plus, it even dives into Grafana, which is excellent if you want to explore it on your own.
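Before trades reach Kafka and Spark, each websocket message has to be unpacked into individual records. The sketch below assumes Finnhub's trade message shape: a `type` of `"trade"` with a `data` list whose items carry `s` (symbol), `p` (price), `v` (volume), and `t` (timestamp in milliseconds). Check the current Finnhub documentation before relying on these field names. As an example downstream metric, it also computes a volume-weighted average price (VWAP).

```python
import json
from typing import Optional


def trades_from_message(raw: str) -> list[dict]:
    """Unpack one websocket message into flat trade records (empty for non-trade messages)."""
    message = json.loads(raw)
    if message.get("type") != "trade":
        return []  # e.g. keep-alive pings
    return [
        {
            "symbol": trade["s"],
            "price": float(trade["p"]),
            "volume": float(trade["v"]),
            "ts_ms": int(trade["t"]),
        }
        for trade in message.get("data", [])
    ]


def vwap(trades: list[dict]) -> Optional[float]:
    """Volume-weighted average price, or None if there is no volume."""
    total_volume = sum(t["volume"] for t in trades)
    if total_volume == 0:
        return None
    return sum(t["price"] * t["volume"] for t in trades) / total_volume
```

In the pipeline, each record could be produced to a Kafka topic keyed by `symbol`. With key-based partitioning, all trades for one symbol then land in the same partition and stay in order.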

Skills and technologies used:

- Docker

- Data ingestion

- Kafka

- Stream processing

- Kubernetes

- Scala

- Cassandra

- Dashboards

- Data visualization

- Grafana

Source code: Real-Time Financial Market Data Pipeline

Music Application Real-Time Data Processing

This project mocks real-world data by generating streaming event data and processing it with Apache Spark, Airflow, Kafka, and a variety of other technologies. It mimics the event streams of platforms like Spotify or Pandora, creating opportunities for event data analysis that's applicable in the real world. As a result, this is one of the stronger data engineering side projects for intermediate professionals.

Plus, it's an excellent way to gain hands-on experience using Apache Airflow, Docker, and many other technologies. It also features real-time data processing and storage in a data lake. There's also a data visualization element, as well as data warehousing with BigQuery, Google Cloud Platform's data warehouse solution.
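The mock stream at the heart of this project can be approximated with a small event generator. The sketch below isn't the project's generator. It produces hypothetical listen events from a made-up three-song catalogue, using a seeded random number generator so runs are reproducible. Each event could then be serialized to JSON and published to a Kafka topic.

```python
import json
import random
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ListenEvent:
    user_id: int
    song: str
    artist: str
    ts: int          # Unix seconds
    duration_s: int


# Hypothetical catalogue: (song, artist, duration in seconds).
CATALOGUE = [
    ("Song A", "Artist 1", 210),
    ("Song B", "Artist 2", 185),
    ("Song C", "Artist 1", 242),
]


def generate_events(n: int, start_ts: int, seed: int = 42) -> list[ListenEvent]:
    """Generate n listen events with strictly increasing timestamps."""
    rng = random.Random(seed)
    ts = start_ts
    events = []
    for _ in range(n):
        song, artist, duration = rng.choice(CATALOGUE)
        ts += rng.randint(1, 30)  # 1 to 30 seconds between events
        events.append(ListenEvent(rng.randint(1, 1000), song, artist, ts, duration))
    return events


for event in generate_events(3, start_ts=1_700_000_000):
    print(json.dumps(asdict(event)))
```

Seeding the generator makes test runs repeatable. A fixed seed is also useful when you're debugging a downstream Spark job against known input.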

Skills and technologies used:

- Docker

- Airflow

- Terraform

- Kafka

- Spark Streaming

- dbt

- GCP

- Python

- Data Studio

- BigQuery

Source code: Music Application Real-Time Data Processing

Movie Recommendation System

For those interested in open-source data engineering projects aimed at intermediate professionals, this is a solid option. It taps into several Azure services, including Blob Storage, Data Lake, Databricks, and Dashboard. It also uses extract, transform, and load (ETL) tools, which are valuable for any aspiring or mid-career data scientist or analyst.

With this end-to-end data engineering project, you'll work with a robust dataset from MovieLens. Its large volume of data lets you build a data pipeline for the project with ease. Plus, the results provide intriguing insights, particularly if you're a data engineer who's also a film fan.
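A simple first output from MovieLens data is a popularity baseline: the highest-rated movies among those with enough ratings. The sketch assumes MovieLens's `ratings.csv` layout (`userId,movieId,rating,timestamp`) and uses plain Python. In Spark SQL, the same idea is a `GROUP BY movieId` with `HAVING COUNT(*) >= 50`. This is a non-personalized baseline, not the project's recommendation model.

```python
import csv
import io
from collections import defaultdict


def top_rated(ratings_csv: str, min_ratings: int = 50, n: int = 10) -> list[tuple[int, float]]:
    """Return (movieId, average rating) for the n best-rated movies with enough ratings."""
    sums: dict[int, float] = defaultdict(float)
    counts: dict[int, int] = defaultdict(int)
    for row in csv.DictReader(io.StringIO(ratings_csv)):
        movie_id = int(row["movieId"])
        sums[movie_id] += float(row["rating"])
        counts[movie_id] += 1
    eligible = [
        (sums[m] / counts[m], counts[m], m) for m in counts if counts[m] >= min_ratings
    ]
    # Highest average first; break ties by number of ratings, then by movieId.
    eligible.sort(key=lambda item: (-item[0], -item[1], item[2]))
    return [(movie_id, round(avg, 3)) for avg, _, movie_id in eligible[:n]]
```

The `min_ratings` threshold keeps a movie with a single five-star rating from topping the list. Tuning it is a simple way to see how sparse ratings distort averages.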

Skills and technologies used:

- ETL tools

- Azure

- Spark SQL

Source code: Movie Recommendation System

Advanced Data Engineering Projects for Portfolio

Advanced projects for data engineers usually require more robust skill sets. However, some may be less challenging but serve as solid ways to impress hiring managers or recruiters when added to a portfolio.

Additionally, projects that you can tweak or adjust to make them more advanced are solid options. With those, you can essentially take things beyond what’s initially presented, allowing you to put the best of what you have to offer on display.

Finally, many of these more advanced options have clear benefits when it comes to showcasing your value to an employer. They dive into concepts that are highly applicable to a variety of industries or scenarios and that can elevate your career.

Here are some advanced-level options to check out.

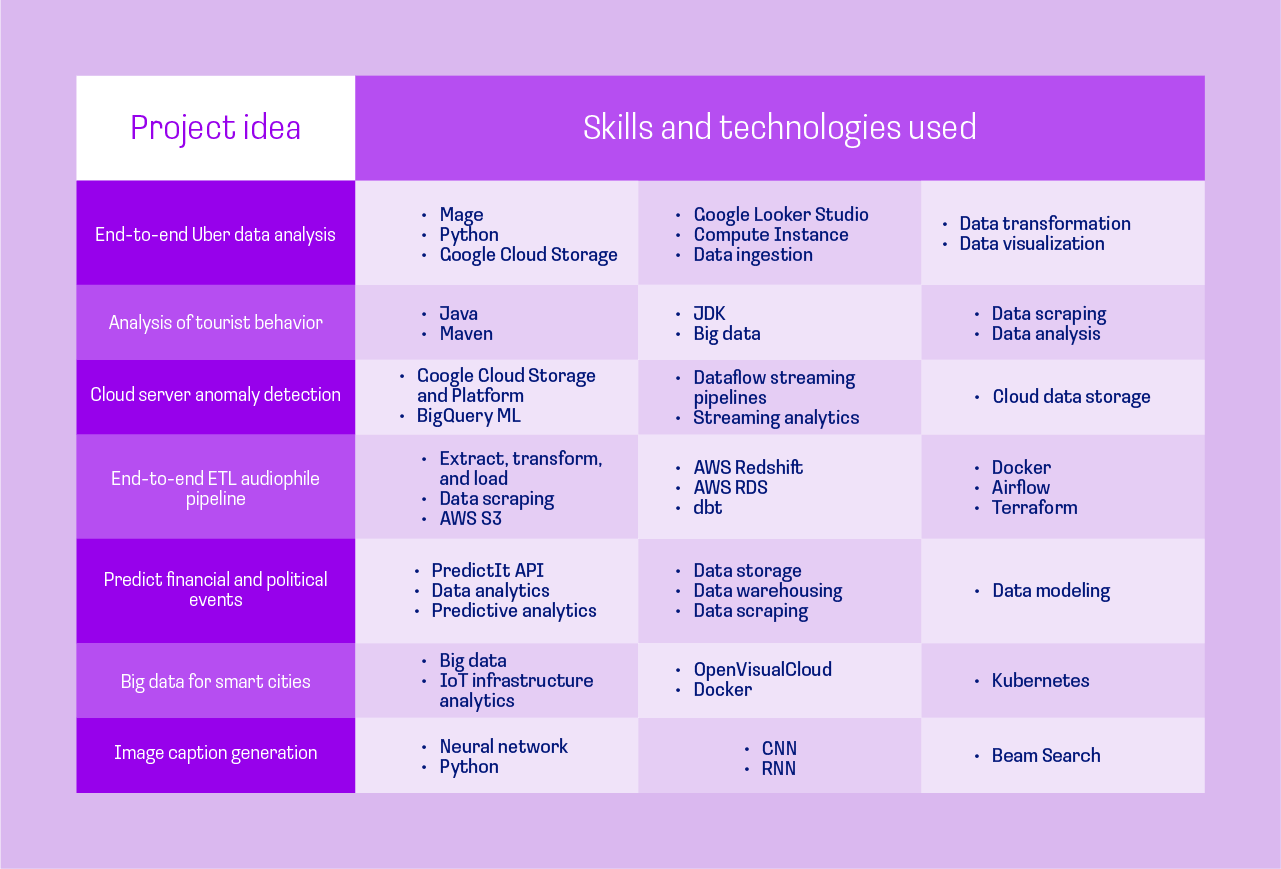

End-to-End Uber Data Analysis

For those interested in using data for problem-solving, this project is a solid way to go. You'll work with data representing the activity of Uber, one of the leading rideshare companies, and use it for analysis that yields powerful insights. Plus, you'll get to use BigQuery, a skill that's highly sought after by employers.

With this end-to-end project, you get the benefit of a helpful video. It helps show the various steps and makes processes clearer. Plus, it taps into a variety of skill areas, which is why it’s a helpful addition to a portfolio.
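A core step in an end-to-end project like this is modeling flat trip records into fact and dimension tables before loading them into the warehouse. The sketch below builds a datetime dimension with surrogate keys, plus a fact table that references it. The input fields `pickup_datetime` and `fare_amount` are hypothetical, and a real model would include more dimensions.

```python
from datetime import datetime


def build_datetime_dim_and_fact(trips: list[dict]) -> tuple[list[dict], list[dict]]:
    """Split trips into a datetime dimension and a fact table keyed by datetime_id."""
    dim_by_ts: dict[str, dict] = {}
    fact = []
    for trip in trips:
        ts = trip["pickup_datetime"]
        if ts not in dim_by_ts:
            dt = datetime.fromisoformat(ts)
            dim_by_ts[ts] = {
                "datetime_id": len(dim_by_ts),  # surrogate key
                "pickup_datetime": ts,
                "pick_hour": dt.hour,
                "pick_day": dt.day,
                "pick_month": dt.month,
                "pick_weekday": dt.weekday(),  # Monday == 0
            }
        fact.append({
            "datetime_id": dim_by_ts[ts]["datetime_id"],
            "fare_amount": trip["fare_amount"],
        })
    return list(dim_by_ts.values()), fact
```

Trips that share a pickup time reuse one dimension row. Time-based questions, such as fares by hour of day, then become a join on `datetime_id` rather than repeated timestamp parsing.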

Skills and technologies used:

- Mage

- Python

- Google Cloud Storage

- Google Looker Studio

- Compute Instance

- Data ingestion

- Data transformation

- Data visualization

Source code: End-to-End Uber Data Analysis

Analysis of Tourist Behavior

This is one of the projects for a data engineer portfolio that’s worth exploring, particularly for professionals who want to learn more about business intelligence and gain hands-on experience in an area that helps them generate valuable insights. The project focuses on tourist sentiment and preferences by researching their behavior.

Tourists typically use the internet to gather information, so scraping data from multiple sources can provide a surprising amount of valuable information.

Skills and technologies used:

- Java

- Maven

- JDK

- Big data

- Data scraping

- Data analysis

Source code: Analysis of Tourist Behavior

Cloud Server Anomaly Detection

With cloud servers an increasingly critical part of business operations, practice projects focused on anomaly detection are a wise choice for professionals trying to build a portfolio that stands out. This one lets you perform data analysis in a way that's highly applicable to today's employers. Plus, you can dive into various programming languages, technologies, and tools, such as Cloud AI Platform, BigQuery ML (machine learning), streaming pipelines, and more.

While the project is more complex than those for beginner or intermediate professionals, it's reasonably simple to follow. You're guided through each step, which makes it feel intuitive and is excellent for any student of the field looking for additional hands-on education.
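The project lists BigQuery ML among its tools. As a lightweight illustration of the underlying idea, the sketch below swaps in a different, simpler technique. It flags a metric reading as anomalous when the reading sits more than `threshold` standard deviations from the mean of the preceding `window` readings. The CPU-utilization series in the example is made up.

```python
from statistics import fmean, pstdev


def rolling_zscore_anomalies(values: list[float], window: int = 20,
                             threshold: float = 3.0) -> list[int]:
    """Indices whose value is more than `threshold` std devs from the trailing window's mean."""
    anomalies = []
    for i in range(window, len(values)):
        reference = values[i - window:i]  # the current point is excluded
        mean, sd = fmean(reference), pstdev(reference)
        if sd == 0:
            # A perfectly flat window: any change at all is treated as anomalous.
            if values[i] != mean:
                anomalies.append(i)
        elif abs(values[i] - mean) / sd > threshold:
            anomalies.append(i)
    return anomalies


cpu = [50.0, 52.0] * 10 + [95.0, 51.0, 50.0]  # made-up CPU utilization (%)
print(rolling_zscore_anomalies(cpu))  # [20]
```

Excluding the current point from its own reference window matters: otherwise a spike inflates the standard deviation it's judged against. The spike does still inflate later windows for a while, which is one reason production systems often prefer robust statistics or learned models.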

Skills and technologies used:

- Google Cloud Storage and Platform

- BigQuery ML

- Dataflow streaming pipelines

- Streaming analytics

- Cloud data storage

Source code: Cloud Server Anomaly Detection

End-to-End ETL Audiophile Pipeline

Among end-to-end data engineering project ideas, this one is an excellent option for more experienced professionals. You’ll create a data processing pipeline from end to end using raw data from Crinacle’s InEarMonitor and Headphone databases. The end result is a Metabase dashboard, giving you a way to gather critical insights.

Plus, this project dives a bit into data quality assurance. It also involves data from multiple sources, which is beneficial for those looking to continue moving forward in their careers.
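Data quality checks like the ones this project touches on can start very simply: required fields present, numeric values in range, and no duplicate keys. The sketch below runs those three checks over scraped rows. The column names (`model`, `price`) and the price range are hypothetical, not the actual schema of Crinacle's databases. In the project's stack, similar checks could be expressed as dbt tests.

```python
def check_quality(rows: list[dict], unique_key: str, required: tuple[str, ...],
                  ranges: dict[str, tuple[float, float]]) -> list[str]:
    """Return human-readable failures for missing, out-of-range, and duplicate values."""
    failures = []
    seen = set()
    for i, row in enumerate(rows):
        for column in required:
            if row.get(column) in (None, ""):
                failures.append(f"row {i}: {column} is missing")
        for column, (low, high) in ranges.items():
            value = row.get(column)
            if value is not None and not low <= value <= high:
                failures.append(f"row {i}: {column}={value} outside [{low}, {high}]")
        key = row.get(unique_key)
        if key not in (None, ""):  # missing keys are already reported above
            if key in seen:
                failures.append(f"row {i}: duplicate {unique_key} {key!r}")
            seen.add(key)
    return failures


scraped = [{"model": "A", "price": 100}, {"model": "A", "price": -5}, {"model": "", "price": 50}]
for failure in check_quality(scraped, "model", ("model", "price"), {"price": (0, 5000)}):
    print(failure)
```

Returning every failure, instead of stopping at the first, gives a complete picture of a bad batch. A pipeline task can then fail loudly whenever the list is non-empty.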

Skills and technologies used:

- Extract, transform, and load

- Data scraping

- AWS S3

- AWS Redshift

- AWS RDS

- dbt

- Docker

- Airflow

- Terraform

Source code: End-to-End ETL Audiophile Pipeline

Predict Financial and Political Events

For advanced professionals looking for data engineering examples to explore, this project using PredictIt’s API is a solid choice. It harnesses data from an API as a starting point. Once it’s cross-referenced with other types of data – such as information scraped from social media and news outlets – it’s possible to spot intriguing correlations.

This is one of the more advanced data engineer project ideas because it requires some of your own data collection from different sources to make the most of it. You may also need to develop part of the processing pipeline on your own. However, that also means this data project can go in nearly any direction, and you can combine analysis and visualization if you like.
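Cross-referencing starts with turning the API's nested response into flat rows you can join against scraped data. The sketch below assumes a response shaped like PredictIt's market data: a `markets` list whose items contain a `contracts` list with `lastTradePrice`. Treat those field names as assumptions and confirm them against a live response. The `mention_counts` mapping stands in for hypothetical counts you might scrape from news or social media.

```python
def flatten_markets(payload: dict) -> list[dict]:
    """One row per contract, carrying its parent market's id and name."""
    rows = []
    for market in payload.get("markets", []):
        for contract in market.get("contracts", []):
            rows.append({
                "market_id": market.get("id"),
                "market_name": market.get("name"),
                "contract_id": contract.get("id"),
                "contract_name": contract.get("name"),
                "last_price": contract.get("lastTradePrice"),
            })
    return rows


def attach_mentions(rows: list[dict], mention_counts: dict) -> list[dict]:
    """Left-join hypothetical scraped mention counts onto contract rows by market_id."""
    return [{**row, "mentions": mention_counts.get(row["market_id"], 0)} for row in rows]
```

Flattening to one row per contract fits the way the data is later stored and joined. Once it's in that shape, adding another source is just one more keyed join.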

Skills and technologies used:

- PredictIt API

- Data analytics

- Predictive analytics

- Data storage

- Data warehousing

- Data scraping

- Data modeling

Source code: Predict Financial and Political Events

Big Data for Smart Cities

For a big data project that focuses on smart cities and smart IoT infrastructure, this is one of the data engineer portfolio project examples not to overlook. It presents several scenarios with multiple sources of data, such as traffic information and camera streams. The processed data can support better decision-making, allowing cities to solve many different types of problems.

While there’s a decent walkthrough with this project, it’s critical to remember this isn’t a data engineer project for beginners. Instead, it’s better for professionals with experience in data science or analytics.

Skills and technologies used:

- Big data

- IoT infrastructure analytics

- OpenVisualCloud

- Docker

- Kubernetes

Source code: Big Data for Smart Cities

Image Caption Generation

Companies increasingly publish images online, and many require captions to connect with an audience more effectively. That’s what makes this one of the more intriguing data engineer project examples for those who want to design a project that’s highly relevant in the current landscape.

With this project, you get to tap into neural networks, making it an excellent option for exploring a new skill area. Plus, it includes the use of machine learning and big data algorithms for efficient data analysis and caption generation.
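Beam search, listed among the project's techniques, decodes a caption by keeping the `beam_width` highest-scoring partial captions at each step, instead of only the single best word. In the project, the next-word probabilities would come from the trained model (a CNN encoder and an RNN decoder, per the skills list). In the sketch below, a hypothetical lookup table stands in for the model, so the search itself can be run and inspected.

```python
import math
from typing import Callable

NextProbs = Callable[[list[str]], dict[str, float]]


def beam_search(next_probs: NextProbs, start: str, end: str,
                beam_width: int = 3, max_len: int = 20) -> tuple[list[str], float]:
    """Return the highest log-probability token sequence found with beam search."""
    beams = [([start], 0.0)]  # (tokens so far, summed log-probability)
    finished: list[tuple[list[str], float]] = []
    for _ in range(max_len):
        candidates = [
            (tokens + [tok], score + math.log(p))
            for tokens, score in beams
            for tok, p in next_probs(tokens).items()
            if p > 0
        ]
        if not candidates:
            break
        # Keep only the beam_width best expansions across all beams.
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for tokens, score in candidates[:beam_width]:
            (finished if tokens[-1] == end else beams).append((tokens, score))
        if not beams:
            break
    finished.extend(beams)  # captions still open when max_len was reached
    return max(finished, key=lambda c: c[1])


# Hypothetical stand-in for the trained model: next-word probabilities by previous word.
TOY_MODEL = {
    "<s>": {"a": 0.6, "the": 0.4},
    "a": {"dog": 0.5, "cat": 0.5},
    "the": {"dog": 0.9, "cat": 0.1},
    "dog": {"</s>": 1.0},
    "cat": {"</s>": 1.0},
}
tokens, log_p = beam_search(lambda toks: TOY_MODEL[toks[-1]], "<s>", "</s>", beam_width=2)
print(" ".join(tokens[1:-1]), round(math.exp(log_p), 2))  # the dog 0.36
```

With `beam_width=1`, this reduces to greedy decoding and picks "a dog" (probability 0.3), because "a" looks best at the first step. The wider beam recovers the more likely "the dog". Scores here aren't length-normalized, so longer captions are penalized. Many captioning systems divide by length to offset that.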

Skills and technologies used:

- Neural network

- Python

- CNN

- RNN

- Beam Search

Source code: Image Caption Generation

Conclusion

Ultimately, embarking on data engineering pet projects is more than just a learning exercise; it's a journey of personal and professional growth, making them ideal for anyone interested in a career in data engineering. Through these projects, beginners can solidify their foundational data engineering skills while advanced practitioners can explore new horizons and tackle complex challenges.

Each project not only enhances your technical capabilities but also boosts your problem-solving skills, creativity, and portfolio, making you a more versatile and sought-after professional in the data engineering landscape. Couple that with reading some of the best books for data engineers, and you'll be well positioned to become a data engineer or advance your career.

So, whether you're constructing your first database or deploying a sophisticated machine learning model, remember that every line of code you write and every dataset you wrangle brings you one step closer to mastering the art of data engineering. Let your curiosity lead the way, and may your passion for data drive you toward endless possibilities and innovations.
