Shared Mutable State in Rust: Is It Really the Root of All Evil?

The author of this article is EPAM Systems Engineer Irine Kokilashvili.

Introduction

This article was inspired by Tom Kaitchuck’s YouTube video “Why Rust is a significant development in programming languages.” It is aimed at everyone interested in Rust and software development. I wrote it while learning about shared mutable state in Rust, and my goal is to share what I learned.

I extend my heartfelt gratitude to my colleagues Andrey, Ilya, and Anton, who dedicated their time to review and provide valuable feedback for this article.

Garbage collection and memory management

When a computer science problem is solved well, the solution often becomes a higher level of abstraction, so that later programs can be written without a complete understanding of the original problem. If the problem remains unsolved, it tends to resurface in a modified form at the next level of abstraction.

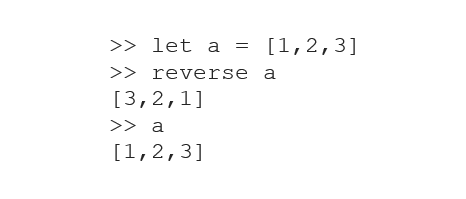

Consider the example of memory management and garbage collection. Garbage collection is a process through which the runtime environment automatically identifies and reclaims memory that is no longer needed by the program. This allows us to focus on application logic without having to worry about memory management.
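For contrast, Rust has no tracing garbage collector: a value is released when its owner goes out of scope. The sketch below makes that visible by recording each release in a log. The `Buffer` type and the `release_order` function are hypothetical names invented for this illustration.

```rust
use std::cell::RefCell;

/// Hypothetical resource that records the moment it is released.
struct Buffer<'a> {
    name: &'static str,
    log: &'a RefCell<Vec<String>>,
}

impl Drop for Buffer<'_> {
    // Runs automatically when the owning variable goes out of scope.
    fn drop(&mut self) {
        self.log.borrow_mut().push(format!("freed {}", self.name));
    }
}

/// Returns the order in which the events below happened.
fn release_order() -> Vec<String> {
    let log = RefCell::new(Vec::new());
    {
        let _outer = Buffer { name: "outer", log: &log };
        {
            let _inner = Buffer { name: "inner", log: &log };
        } // `_inner` is released here, at the end of its scope
        log.borrow_mut().push("inner scope ended".to_string());
    } // `_outer` is released here
    log.into_inner()
}
```

Calling `release_order()` yields `freed inner`, `inner scope ended`, `freed outer`, in that order. The release points are fixed by the program structure rather than chosen by a runtime.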

While the introduction of garbage collectors alleviated memory-related issues, it brought its own challenges, such as potential performance issues. It is also worth mentioning the hellish trouble that arises when we combine runtimes that each have their own garbage collector. A lot of fun is guaranteed, for example, when integrating Java and Go libraries and making them work in harmony within a Python application.

Rust disadvantages

Even though Rust excels in many areas, it has some disadvantages.

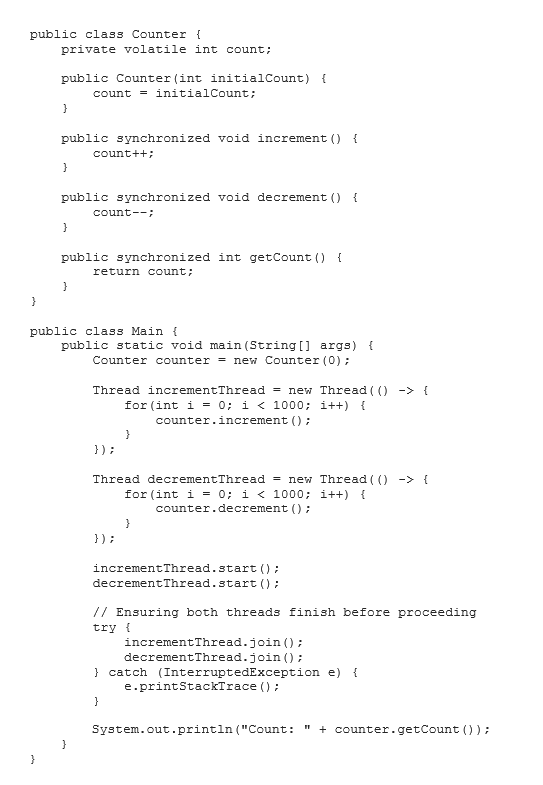

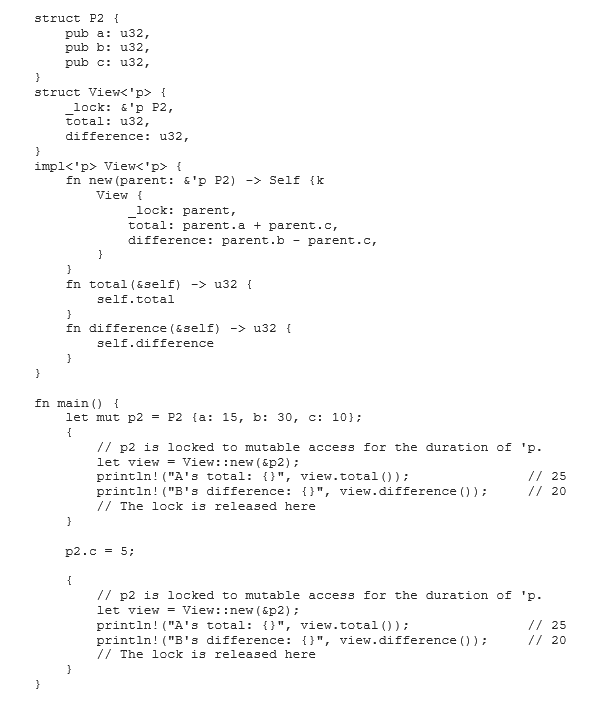

One difficulty is asynchronous programming, which is relatively painless in languages with garbage collection. Rust supports asynchronous programming, but the ownership and borrowing model makes handling async operations much more difficult than in other languages.
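To make the friction concrete, here is a minimal sketch that uses only the standard library. The `block_on` executor and the `spawn` function are toy stand-ins written for this example, not the API of any real runtime. The bounds on `spawn` (`Send + 'static`) are an assumption modeled on what multi-threaded runtimes typically require, and they are where ownership starts to bite.

```rust
use std::future::Future;
use std::pin::pin;
use std::sync::{Arc, Mutex};
use std::task::{Context, Poll, Wake, Waker};
use std::thread;

/// Waker that does nothing; enough for futures that never return `Pending`.
struct NoopWaker;

impl Wake for NoopWaker {
    fn wake(self: Arc<Self>) {}
}

/// Toy executor: polls the future in a loop until it completes.
fn block_on<F: Future>(fut: F) -> F::Output {
    let waker = Waker::from(Arc::new(NoopWaker));
    let mut cx = Context::from_waker(&waker);
    let mut fut = pin!(fut);
    loop {
        if let Poll::Ready(value) = fut.as_mut().poll(&mut cx) {
            return value;
        }
        thread::yield_now();
    }
}

/// Hypothetical `spawn`: the task may outlive the caller's stack frame,
/// so the future must own everything it uses (`'static`) and be `Send`.
fn spawn<F>(fut: F) -> thread::JoinHandle<F::Output>
where
    F: Future + Send + 'static,
    F::Output: Send + 'static,
{
    thread::spawn(move || block_on(fut))
}

/// The task takes an owned `Arc` handle instead of a plain reference.
async fn add_to(counter: Arc<Mutex<u32>>, amount: u32) {
    let mut guard = counter.lock().unwrap();
    *guard += amount;
}

/// Spawns three tasks that add 1, 2, and 3 to a shared counter.
fn run_tasks() -> u32 {
    let counter = Arc::new(Mutex::new(0));
    let handles: Vec<_> = (1..=3)
        .map(|n| spawn(add_to(Arc::clone(&counter), n)))
        .collect();
    for handle in handles {
        handle.join().unwrap();
    }
    let total = *counter.lock().unwrap();
    total
}
```

`run_tasks()` returns 6. If `add_to` took `&Mutex<u32>` and the caller passed a reference to a local variable, the `'static` bound on `spawn` would reject the program at compile time. In a garbage-collected language the equivalent code would simply capture the variable, which is why the same task feels heavier in Rust.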

Rust also has powerful features for dealing with shared memory, but those guarantees cover only the program's own memory. When a Rust program interacts with external resources or external systems, such as files, we have to handle synchronization ourselves.

In his blog post “When Rust hurts,” Roman Kashitsyn writes: “Remember that paths are raw pointers, even in Rust. Most file operations are inherently unsafe and can lead to data races (in a broad sense) if you do not correctly synchronize file access. For example, as of February 2023, I still experience a six-year-old concurrency bug in rustup.”
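The point about paths can be sketched with a file-backed counter. All names below (`increment_file`, `increment_file_locked`, `run_locked`, and the temporary file name) are hypothetical and exist only for this illustration. The compiler accepts calls to `increment_file` from several threads at once, even though its read-modify-write steps can interleave and lose updates. Any synchronization has to be added by hand, here by hiding the path behind a `Mutex`.

```rust
use std::fs;
use std::io;
use std::path::{Path, PathBuf};
use std::sync::Mutex;
use std::thread;

/// Read-modify-write on a file-backed counter. Nothing in this signature
/// stops two threads from interleaving the read and the write.
fn increment_file(path: &Path) -> io::Result<()> {
    let current: u64 = fs::read_to_string(path)?.trim().parse().unwrap_or(0);
    fs::write(path, (current + 1).to_string())
}

/// Same operation, but the path is only reachable while holding the lock.
fn increment_file_locked(path: &Mutex<PathBuf>) -> io::Result<()> {
    let guard = path.lock().unwrap();
    increment_file(&guard)
}

/// Runs `threads` workers that each increment the counter `per_thread` times.
fn run_locked(threads: usize, per_thread: usize) -> io::Result<u64> {
    // Hypothetical scratch file in the system temp directory.
    let file = std::env::temp_dir()
        .join(format!("counter-sketch-{}.txt", std::process::id()));
    fs::write(&file, "0")?;

    let shared = Mutex::new(file.clone());
    thread::scope(|s| {
        for _ in 0..threads {
            s.spawn(|| {
                for _ in 0..per_thread {
                    increment_file_locked(&shared).expect("file update failed");
                }
            });
        }
    });

    let total: u64 = fs::read_to_string(&file)?.trim().parse().unwrap_or(0);
    fs::remove_file(&file)?;
    Ok(total)
}
```

With the lock in place, `run_locked(4, 25)` returns `Ok(100)`. The `Mutex` only coordinates threads inside one process. Another process writing to the same path would bypass it entirely, so cross-process safety would need an OS-level mechanism such as file locking.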

Conclusion

Rust's commitment to safety and control makes it a valuable choice for systems programming and for applications where memory safety is vital. However, developers must be prepared to navigate the nuances of Rust, such as the ownership and borrowing model, asynchronous code, and unsafe code.

The views expressed in the articles on this site are solely those of the authors and do not necessarily reflect the opinions or views of Anywhere Club or its members.
